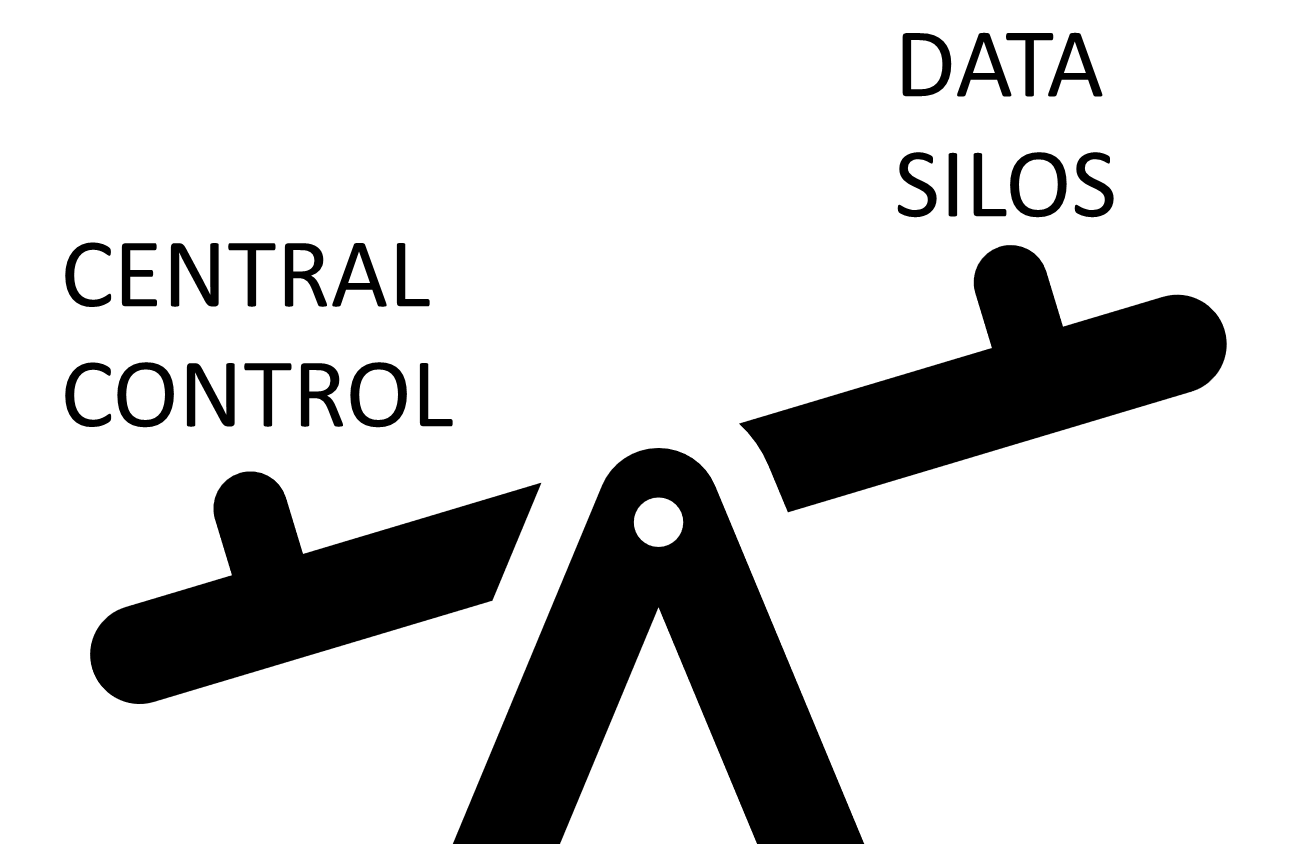

Enterprises have long been faced with a vicious tradeoff: maintain central control over how data is made available across (and sometimes even within) units, or let chaos reign and allow units to make data available to each other however they’d like.

The advantages of central control are many: it simplifies enforcement of security and privacy rules, facilitates maintenance of data quality, reduces complexity of data cataloging and search, enables tracking, control, and reporting of data usage, improves data integration, and increases the ability to support various guarantees related to data integrity and other data access service levels. Unfortunately, it has two major disadvantages: (1) it reduces organizational agility due to the necessary delay to get a new dataset under central control, and (2) the central team doing the data management often becomes an organizational bottleneck and limits scalability of data analysis.

Due to the agility and scalability challenges of centralized control, many enterprises are forced to allow data silos to exist: datasets that live outside of central control so that units that need to quickly make a dataset available without going through a central approval process can do so. Data silos are often created via an ad-hoc process: an individual or team takes some source data, enhances it in various ways, and dumps the result in a silo repository (such as a data lake) for themselves and/or others to use if they so wish. These data silos often have significant value in the short term, but rapidly lose value as time goes on. In some cases, data silos not only lose value over time, but actually become harmful to access due to outdated or incorrect data resulting from lack of maintenance, and failure to keep up with data privacy and sovereignty standards. In many cases, they were not created using approved data governance practices in the first place and present security vulnerabilities for the enterprise.

Even when a data silo does not lose value, that value is typically limited to the creators of the dataset, while the rest of the organization remains unaware of its existence unless they are explicitly notified and directly tutored in its syntax and semantics. Silos only become harder to find over time as institutional memory weakens with employee turnover and movement across units. Furthermore, due to lack of maintenance, they become harder to integrate with other enterprise data over time. Much effort is wasted in the initial creation of these data silos, yet their impact is limited in both breadth and time. [In some cases, the opposite is true: data silos have too much impact, where other units build dependencies on top of silos that were deployed with unapproved data governance standards, and all the harmful side-effects of improperly governed data spread across the enterprise.]

Thus, as enterprises push towards centralized control they lose agility, scalability, and breadth of vision. As they push away from centralized control, they lose data quality, security, and efficiency. To many, it becomes a no-win tradeoff.

The rise of data products

Data products, when implemented properly, are a promising way out of this vicious tradeoff. They require a fundamental shift in attitude on the part of the data producers in the process of making data available within an organization. Whereas previously, making data available to other units involved handing it off to a centralized team, or dumping it into a data silo repository, now a host of new responsibilities falls upon the data producers. It is no longer acceptable to “code, growed, and offload” a dataset, handing off responsibility to others for governance and adherence to organizational best practices. Rather, the original data producers, or a related team within the organization, are charged with a long-term commitment to making the dataset available and maintaining its long-term data quality.

They must think beyond the immediate set of groups within the organization that currently want access to the dataset, and ask more generally: who are the ultimate “customers” of this data product? What do they need to be successful? What are their expectations about the contents of the product? How can we communicate the contents and semantics of the dataset so that it can be accessed and used by a generic “customer”?

The essential feature of data products is that they do not require ownership, or even approval, from a central authority. They thus avoid the agility and scalability problems of centralized data management. However, they do not completely reject all aspects of the centralized control approach we mentioned above. An organization can still require that all data products are physically stored and accessed in a single software system (or across a small number of different systems) in order to maintain global security, as well as tracking, control, and reporting of data usage. Furthermore, a central team can specify the set of data governance rules that data product creators must adhere to. And it can maintain a global database of identifiers associated with customers, suppliers, parts, orders, etc. that data products must use when referring to shared conceptual entities. However, none of these centralized activities is done on a per-dataset basis, which is what would bottleneck the introduction of new data products into the ecosystem.

Nonetheless, the data product approach is closer to the data silo side of the tradeoff than the centralized control side. Any team, as long as it takes long-term responsibility for the dataset, can create a data product and make it available immediately. Just like traditional real-world products, some products will become successful and widely used, whereas others will end up in obscurity or go “out of business”. However, unlike the data silo approach, going “out of business” is an explicit decision by the team in charge of the data product to stop supporting the ongoing maintenance obligations of the product and to remove its availability.

Additionally, just as traditional real-world products come with an expectation of documentation on how to use the product, a number to call when there are issues with the product, and an expectation that the product will evolve as customer requirements change, so too do data products. And just as traditional products need to take advantage of marketplaces such as Amazon to broadcast their availability to potential customers, and must provide the requisite metadata so that they appear in the appropriate places when searched for, so too organizations need to create marketplaces for data products (which may include an approval process) so that data product producers and consumers can find each other.

The burden on data product producers

The primary downside of the data product approach is the increased burden on the data producers (or the team designated by the data producers) to keep the dataset productized. To keep a dataset usable, secure, and properly integrated with other datasets over time requires both initial and ongoing effort. In the centralized approach, most of this effort is done by the centralized team. In the data silo approach, much of this effort never occurs. However, in the data product approach, this effort is distributed across an organization in the direction of the data producers.

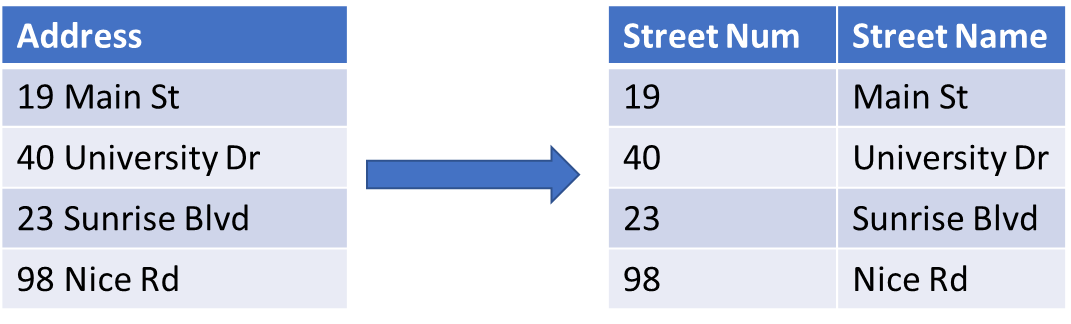

As a simplified example, an organization may decide that street number and street name should (ideally) be stored as separate fields within datasets across the enterprise, rather than combined in a single address field.

The burden for making this change (while also supporting backwards compatibility for applications that used previous versions of the data product) falls on the product maintainers.
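To make the street address example concrete, here is a minimal sketch of what such a change might look like for a product maintainer. Everything in it is an assumption for illustration: the field names (`street`, `street_number`, `street_name`), the `split_street` helper, and the simple parsing pattern are hypothetical, and real-world addresses need far more careful handling. The point is only that the new separate fields are added while the legacy combined field is kept for consumers of earlier versions.

```python
import re
from typing import Optional, TypedDict


class AddressV2(TypedDict):
    street_number: Optional[str]  # new field (hypothetical name)
    street_name: str              # new field (hypothetical name)
    street: str                   # legacy combined field, kept for older consumers


# Assumed pattern: leading digits (optionally followed by one letter),
# whitespace, then the street name. Real addresses are messier than this.
_STREET_RE = re.compile(r"^\s*(\d+[A-Za-z]?)\s+(.+?)\s*$")


def split_street(street: str) -> AddressV2:
    """Split a combined street value into number and name, keeping the original."""
    match = _STREET_RE.match(street)
    if match is None:
        # No recognizable street number: leave it empty rather than guess.
        return {"street_number": None, "street_name": street.strip(), "street": street}
    number, name = match.groups()
    return {"street_number": number, "street_name": name, "street": street}
```

Calling `split_street("123 Main Street")` yields the two new fields alongside the unchanged `street` value, so applications written against the previous version of the data product keep working.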

Similarly, if a new data product comes along that is designed to represent a global view of all customer data (id, name, address, etc) stored across the entire enterprise, all other data products that refer to customer information may be asked to include references to the customer identifiers used by the global customer data product, in order to improve integration with other datasets that refer to an overlapping set of customers. Even if not directly asked to do so, the team maintaining a data product should ideally independently realize the value of this potential integration, and add the additional foreign references on their own initiative, in order to increase the value of their data product.
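As a rough illustration of adding such foreign references, the sketch below attaches a global customer identifier to each row of a local dataset. The column names (`email`, `customer_id`, `global_customer_id`) and the choice to match on a normalized email address are assumptions made purely for this example; in practice, matching records across datasets is usually a much harder entity resolution problem.

```python
from typing import Dict, List, Optional


def normalize_email(email: str) -> str:
    """Normalize an email so trivially different spellings compare equal."""
    return email.strip().lower()


def add_global_customer_ids(
    local_rows: List[Dict[str, str]],
    global_customers: List[Dict[str, str]],
) -> List[Dict[str, Optional[str]]]:
    """Return copies of local_rows with a 'global_customer_id' reference added.

    Rows with no match in the global customer product get None, so that
    unmatched customers stay visible instead of being silently dropped.
    """
    # Build a lookup from normalized email to the global identifier.
    index = {normalize_email(c["email"]): c["customer_id"] for c in global_customers}

    enriched: List[Dict[str, Optional[str]]] = []
    for row in local_rows:
        new_row: Dict[str, Optional[str]] = dict(row)
        new_row["global_customer_id"] = index.get(normalize_email(row["email"]))
        enriched.append(new_row)
    return enriched
```

The original rows are copied rather than modified, which keeps the existing version of the data product intact while the enriched version is rolled out.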

Furthermore, it is critical that a comprehensive set of metadata is maintained about the data product so that it can appear in organizational data catalogs, knowledge graphs, and searches. This includes ensuring that the semantics of a dataset are accurately represented so that others can correctly use it. Support must be provided for people who need help understanding and using it, and a feedback mechanism put in place so that the dataset can be improved to meet customer needs over time.
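One way to picture these metadata obligations is as a small descriptor that travels with the data product, plus a check that flags what is still missing before the product is registered in a catalog. The sketch below is hypothetical: the `DataProductDescriptor` class, its field names, and the `catalog_gaps` function are my own illustration and do not correspond to any particular catalog tool.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class DataProductDescriptor:
    """Hypothetical metadata a team maintains alongside its data product."""
    name: str
    owner_team: str
    description: str
    support_contact: str   # where consumers go for help
    feedback_channel: str  # where consumers send improvement requests
    column_semantics: Dict[str, str] = field(default_factory=dict)


def catalog_gaps(descriptor: DataProductDescriptor, columns: List[str]) -> List[str]:
    """List the metadata still missing for the given dataset columns."""
    gaps: List[str] = []
    for attr in ("name", "owner_team", "description", "support_contact", "feedback_channel"):
        if not getattr(descriptor, attr).strip():
            gaps.append(f"missing {attr}")
    for col in columns:
        # Every column needs a plain-language statement of what it means.
        if not descriptor.column_semantics.get(col, "").strip():
            gaps.append(f"undocumented column: {col}")
    return gaps
```

An empty result from `catalog_gaps` does not mean the documentation is good, only that nothing is blank; judging whether the semantics are accurately described still takes a human.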

All of these things take significant effort, and the success of the data product approach is entirely dependent on the availability and willingness of the teams creating and maintaining data products to perform it.

Not only must they be available and willing, they must also have the ability to perform these tasks in the first place. Some of the tasks involved in preparing a data product require various facets of technical knowledge: for example, machine learning techniques that can improve data integration, the data orchestration involved in making the product available, an understanding of data cataloging and knowledge graphs, database administration, etc. Although it is (somewhat) straightforward to ensure a centralized team has all of these skills, it is not at all guaranteed that these skills are present on each independent data product team.


Data fabric to the rescue?

The data fabric is an enterprise-wide data management approach that leverages an array of intelligent software and data management best practices to weave diverse data together across an enterprise. It uses metadata to drive machine learning and automation tools that make datasets easy to find, integrate, deploy, and query. For example, when a new dataset gets incorporated into a data fabric, software tools extract metadata about this new dataset from a combination of the dataset itself and the source system(s) that generated it, so that it can be cataloged in centralized metadata repositories and ultimately integrated with other related datasets. Machine learning tools aid in this integration process, which includes performing data cleaning and transformation activities, making recommendations on potential uses and potential downstream users of the new dataset, and implementing the required data governance standards as they relate to it (such as setting up access controls). [The data fabric also includes automation of various data orchestration and data analysis functions, but these facets of the data fabric are less relevant to our present discussion.]
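To give a feel for the simplest form of this automated metadata extraction, here is a toy sketch that profiles a dataset represented as a list of rows. The `extract_metadata` function and the shape of its output are assumptions for illustration only; actual data fabric tooling also draws on the source systems and goes well beyond per-column type and null counts.

```python
from typing import Any, Dict, List


def extract_metadata(name: str, rows: List[Dict[str, Any]]) -> Dict[str, Any]:
    """Derive basic catalog metadata (row count, column types, nulls) from the data itself."""
    columns: Dict[str, Dict[str, Any]] = {}
    for row in rows:
        for col, value in row.items():
            info = columns.setdefault(col, {"types": set(), "null_count": 0})
            if value is None:
                info["null_count"] += 1
            else:
                info["types"].add(type(value).__name__)
    return {
        "dataset": name,
        "row_count": len(rows),
        "columns": {
            # Sort type names so the output is stable across runs.
            col: {"types": sorted(info["types"]), "null_count": info["null_count"]}
            for col, info in columns.items()
        },
    }
```

Note that this sketch only counts explicit `None` values as nulls; a column that is absent from some rows is not counted, which is one of many details a real profiler would have to handle.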

As I’ve described in past writings, the central theme of the data fabric is to use software to automate various functions that are today either performed by humans or otherwise neglected because of the excessive human effort. This leads to the critical question: can the data fabric be used to automate the creation of data products? If the answer to this question is ‘yes’, seemingly that would be HUGE. We’ve already invested 1500 words in this article (so far) explaining the downsides of the data centralization vs. data silo tradeoff, that data products get around this tradeoff, and that the main disadvantage of data products is the human effort involved. If the data fabric can eliminate or reduce that human effort, we could get all the benefits of data products without the main cost.

The good news is that the answer to this question is mostly ‘yes’, except for some data product features that necessarily require human actions, such as a number to call to get human help with the dataset. The bad news is that the good news is not as good as it initially seems.

For example, given a new source dataset that an enterprise gets access to, the data fabric can certainly package it and turn it into a data product. It can do some basic data cleaning and transformations of this new dataset. It can extract some important metadata and get it inserted into enterprise-wide data catalogs and knowledge graphs. It can deploy the dataset into enterprise analytical tools such as data warehouses or lake-houses and query systems, and make recommendations to downstream applications. It can even provide some basic documentation about this dataset and recommended uses based on the metadata it extracted. And it can automate many data governance functions, and evolve these functions over time as requirements change.

Is the result of all this automated work a valid data product? Yes, absolutely! But would downstream applications actually benefit from the existence of this data product? There — the answer is ‘only sometimes’.

To understand why this is the case, we must remember that data products are supposed to parallel the concept of regular, tangible products that one would buy from a store. How many products that you buy today are developed with little-to-no human involvement? That new toaster? Washing machine? Book? Sunglasses? Probably very few. The process of developing a valuable product is fundamentally a creative process. It involves combining wisdom and deep understanding of what people want and need, in order to create something novel that customers can potentially benefit from. Machines and machine learning are very good at finding patterns and making recommendations or predictions based on those patterns. However, they are very bad at creativity. For example, machines can do very advanced activities — including going so far as driving a car — if those activities involve taking actions based on past direct experiences and patterns that can be learned. But if you put the self-driving car in a totally unforeseen circumstance that requires creativity and ingenuity to properly navigate, it doesn’t work.

It remains an area of great debate whether a machine will ever be able to write a book along the lines of Harry Potter that people will actually want to read. But certainly we are nowhere near that creative ability today. And since most valuable data products involve large amounts of creativity in their production, it remains beyond the reach of the data fabric to automatically create them.

Nonetheless, there are many products that are bought at a store that involve little-to-no human involvement. For example, once the basic design of a phone case is complete, creating a new phone case product for a particular new phone that comes out is almost entirely automatable based on the size and specifications of the new phone. Or once a sports team introduces a new logo, the process of creating a new T-shirt product containing this logo is also almost entirely automatable. There are a non-trivial number of simple products that we buy today that do involve little human involvement in their production.

Similarly, there are a non-trivial number of data products that can be automatically created and still have value. This is especially true for “source-aligned data products” — data products that simply package up a source dataset without performing any cleaning, transformation, or integration activities, and make it available to the rest of the enterprise. The process of creating derived data products based on this source-aligned data product is in many cases best left to human teams — especially human teams of domain experts using data mesh practices. Nonetheless, in order to get started in creating the derived data product, the source-aligned data product must exist in the first place. By accelerating the process of creating these initial data products, the data fabric makes a positive contribution by providing a speedily-constructed foundation from which more use-case specific consumer-aligned data products can be generated.

Furthermore, even when a human team needs to get involved in creating a data product, the data fabric can make their lives easier and reduce their burden. While the human team needs to make the important decisions, such as how to clean up dirty data, which datasets to combine, and which foreign references to create, the data fabric can accelerate the processes of getting the product incorporated into enterprise catalogs, finding customers for the data product, and deploying the dataset for ad-hoc analysis and self-serve access.

Data Fabric, Data Mesh, and Data Products

So the bottom line is that yes, the data fabric can, to some degree, automate the creation of data products. However, it is not a best practice to let the data fabric do so by itself, except for the simplest of data products. Auto-generated data products are similar to the auto-generated articles we find online describing the result of a sports game or a Wall Street earnings release: we appreciate the access to the basic data included within, but they are no replacement for the large amount of value that humans can add to the process. When writing about the data fabric vs. the data mesh, I mentioned that although these approaches have some contradictory philosophies, they are not mutually exclusive. When it comes to the creation of data products, they are actually quite complementary: the data fabric takes the more dominant role in creating source-aligned data products that require less human involvement, and the data mesh takes the more dominant role in creating “consumer-aligned” data products.